Tracking systems are improving the meeting experience for everyone. Paul Milligan looks at the different types of technology involved.

The thought of someone tracking your every movement or the sound of your voice may seem intrusive in everyday life, but in a meeting room it is increasingly welcome. Recent years have seen a rise in visual and voice tracking systems designed to improve the experience for both in-room and remote participants.

Visual tracking follows body movements; voice tracking homes in on sound. Some companies provide one of these, others offer both through a partnership with another manufacturer, and others offer a complete voice and visual tracking system of their own. Others, such as Q-Sys, buy the technology from a specialist: Q-Sys bought Seervision in summer 2023 and has very successfully integrated it into its own platform. Across the board, AI plays a key role in automating tracking inside microphones and cameras.

We spoke with manufacturers to understand how these systems work and what integrators should ask to match the right product to their clients’ needs. Jabra has become an established player with software that enables either visual or voice tracking in its videobars. James Spencer, video solutions director, EMEA North for Jabra, explains: “The speaker tracking pans in on someone speaking. But there is also a layer of AI on top of that. For example, if there is a back and forth going on across the table, the camera detects that and pans out so you can see both parties, rather than shifting from one to the other. Our visual tracking works by face and torso detection. The AI is actually looking for those faces and torsos and then it can distinguish if that is a person within that room.”

Shure’s Microflex Advance array microphones use pickup lobes to locate talkers, sending that data to a connected system or device for further processing, such as camera tracking or voice lift. That system then uses the talker location information to adjust the audio capture and/or visual elements such as camera focus.
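To make that hand-off concrete, here is a minimal Python sketch of one thing a connected control system might do with a reported talker position: snap the camera to the nearest stored preset. The preset names, coordinates and the `preset_for_talker` function are hypothetical; Shure’s actual location output and any control-system API will look different.

```python
import math

# Hypothetical camera presets: preset name -> (x, y) seat position in metres,
# as an integrator might store them when commissioning the room.
PRESETS = {
    "head_of_table": (0.0, 2.0),
    "left_side": (-1.5, 0.5),
    "right_side": (1.5, 0.5),
}


def preset_for_talker(talker_xy, presets=PRESETS):
    """Return the preset whose stored position is closest to the reported talker."""
    # math.dist gives the Euclidean distance between two points (Python 3.8+).
    return min(presets, key=lambda name: math.dist(talker_xy, presets[name]))


# A talker reported at (1.2, 0.8) sits closest to the right-hand preset.
print(preset_for_talker((1.2, 0.8)))
```

Nearest-preset snapping is the simplest possible mapping; a real deployment might instead drive continuous pan and tilt from the coordinates.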

Crestron’s ‘intelligent video’ technology stack combines different elements of both voice and visual tracking. Joel Mulpeter, senior director of product marketing, Crestron, explains: “It’s six core technologies including group framing, speaker tracking and presenter tracking, which we call foundational technologies that we expect cameras to do today. We add Intelligence Switching, which is a multi-camera approach, moving between cameras in the space. Visual AI helps us to centre people in the frame and detect what direction they’re looking in to make better camera decisions. The sixth technology is called Composition where we combine multiple shots into a single frame.”

There are multiple technologies available, and that list is growing by the day. Whilst not exhaustive, the examples outlined offer a quick summary of what’s happening in this sector.

If we delve into the technology itself, how do these systems know which voice (or body) to prioritise if multiple people are talking or moving in the room? “If we are using audio, usually we are stacking the last person to speak in a priority order,” says Mulpeter, adding that touches such as “personal priority” can be layered on top. “You can assign personal priority, like giving a CEO precedence based on where they’re located. Otherwise it’s the last person to speak. We stack that in order and just keep rewriting that priority based on microphone data.”
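Mulpeter’s description suggests a simple data structure: a list of current talkers ordered by recency, with an optional per-seat priority that can override it. The Python sketch below is our own illustration under that assumption, not Crestron’s code; the `PriorityStack` class, the zone names and the numeric priorities are hypothetical.

```python
from __future__ import annotations


class PriorityStack:
    """Picks which talker the camera should follow (illustrative only)."""

    def __init__(self, zone_priority: dict[str, int] | None = None):
        # Hypothetical per-seat priorities; unlisted zones default to 0.
        self.zone_priority = dict(zone_priority or {})
        self.talking: list[str] = []  # zones currently talking, most recent first
        self.active: str | None = None

    def start(self, zone: str) -> str | None:
        """Microphone data says `zone` has started talking."""
        if zone in self.talking:
            self.talking.remove(zone)
        self.talking.insert(0, zone)
        return self._rewrite()

    def stop(self, zone: str) -> str | None:
        """Microphone data says `zone` has stopped talking."""
        if zone in self.talking:
            self.talking.remove(zone)
        return self._rewrite()

    def _rewrite(self) -> str | None:
        # max() keeps the first of equally ranked items, and the list is
        # most-recent-first, so ties go to the last person to speak.
        if self.talking:
            self.active = max(self.talking, key=lambda z: self.zone_priority.get(z, 0))
        # If nobody is talking, hold the last shot rather than jumping around.
        return self.active


stack = PriorityStack({"ceo_seat": 10})
stack.start("seat_3")    # -> "seat_3"
stack.start("seat_5")    # -> "seat_5": last person to speak wins
stack.start("ceo_seat")  # -> "ceo_seat"
stack.start("seat_3")    # -> still "ceo_seat": priority beats recency
stack.stop("ceo_seat")   # -> "seat_3"
```

Keeping the whole ordered list, rather than just the current talker, is what lets the system fall back to the previous speaker when a higher-priority voice stops.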

In meetings we often show engagement through small verbal or visual gestures. So how do tracking systems handle short utterances such as “ok” or “hmm”, or small hand movements such as scratching your nose or adjusting your glasses? Can they ignore them, or does the person become an ‘active’ speaker? “Things like ‘ok’ and ‘hmm’ will be picked up by Microsoft Teams in transcription mode, but it is up to the videobar within the room to distinguish the correct focus,” says Spencer. Crestron’s tracking systems use time to make that decision. “From an audio perspective, you need to speak for a certain amount of time, you need to pause for a certain amount of time. Those are customisable, we recommend that people should be speaking for around two seconds before you want to switch to them. From the visual standpoint, we don’t track little movements. It’s something we aspire to do one day, but so far we haven’t seen a good implementation of it that we think leads to a good meeting experience, if anything it seems to be more distracting because cameras are jiggling around,” says Mulpeter.
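The time-based rule Mulpeter describes is essentially hysteresis: a new voice must persist before the camera commits to it, and a framed talker must stay quiet for a while before the camera lets go. Here is a minimal Python sketch, assuming the microphones report one detected talker (or none) per audio frame. The class, the `"wide"` label and the 3-second pause value are hypothetical; only the 2-second talk threshold comes from Crestron’s recommendation.

```python
WIDE = "wide"  # hypothetical label for the group-framing (whole-room) shot


class DwellTimeSwitcher:
    """Time-based shot switching; thresholds are in seconds (illustrative only)."""

    def __init__(self, min_talk_s=2.0, min_pause_s=3.0):
        self.min_talk_s = min_talk_s    # how long a new voice must persist
        self.min_pause_s = min_pause_s  # how long the framed talker may be silent
        self.shot = WIDE
        self._candidate = None          # zone waiting to be switched to
        self._candidate_since = 0.0
        self._last_heard = 0.0          # last time the framed talker was detected

    def update(self, t, talker):
        """Feed one audio frame: time `t` and the detected zone, or None for silence."""
        if talker is not None and talker == self.shot:
            # The framed talker is still speaking: keep the shot, drop any candidate.
            self._last_heard = t
            self._candidate = None
            return self.shot
        if talker != self._candidate:
            # A different voice (or silence) restarts the talk timer.
            self._candidate = talker
            self._candidate_since = t
        if self._candidate is not None and t - self._candidate_since >= self.min_talk_s:
            self.shot = self._candidate
            self._last_heard = t
            self._candidate = None
        elif self.shot != WIDE and t - self._last_heard >= self.min_pause_s:
            # Framed talker has paused long enough: fall back to the room shot.
            self.shot = WIDE
        return self.shot
```

With these defaults, a one-second “hmm” from another seat never reaches the two-second threshold, so the shot stays where it is; both thresholds are constructor arguments, mirroring the point that they are customisable.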

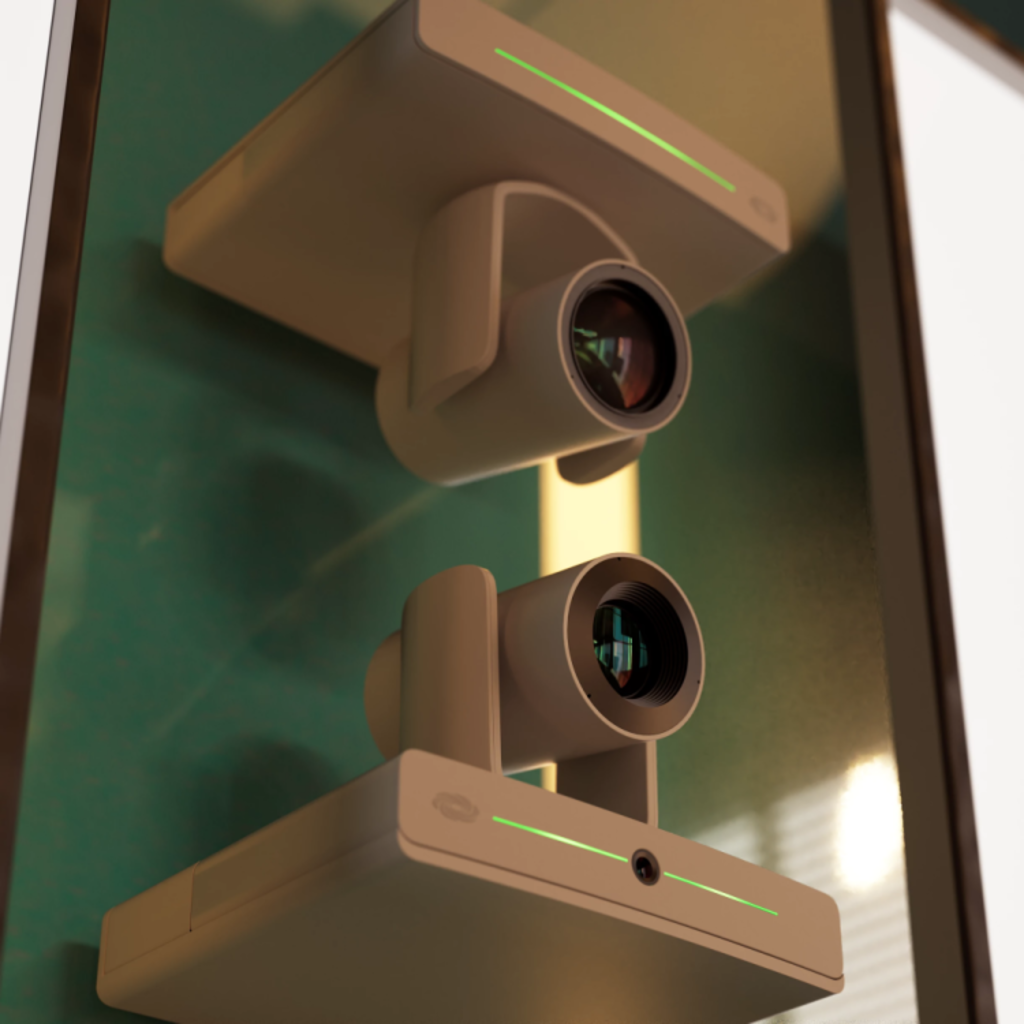

The popularity of PTZ (pan-tilt-zoom) cameras in medium-to-large rooms is tempered by concerns over excessive movement. PTZ cameras can cover a lot of ground by panning and zooming quickly, but too much movement can cause fatigue among meeting participants, especially those watching remotely. “The smaller the room, the more the cameras will move, and you are in danger of it becoming a virtual roller coaster, where you don’t know where you are or what you’re looking at, it gets a bit wild,” says Mulpeter.

“If you’ve got a single camera which is constantly swinging from left to right, it’s not just fatigue, it causes seasickness, so it’s something we absolutely want to avoid,” says Kieron Seth, marketing director Europe for Lumens, which uses a multi-camera system (a principal PTZ camera on top, with a panoramic camera underneath). “You’re getting a clean switch from camera one to camera two,” says Seth. “You don’t see the swing at all.” Lumens’ VC-TR60A is a dual-camera system with built-in voice tracking: an array of microphones in the camera is there not to capture audio for the call but to establish the talker’s location. “The camera would hear me speaking and point towards me with the PTZ head. When you start speaking, we cut to the lower camera (the panoramic). The camera swings to you and then we cut back to the PTZ camera as soon as that shot is established, so we are doing everything we can to avoid seeing that swing,” Seth adds. This technology, like others, clearly takes inspiration from the broadcast sector (more on that later).
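The cut, cover, cut-back sequence Seth describes can be expressed as a small state machine. This Python sketch is our own illustration, not Lumens firmware; the action strings, the bearing values and the 5-degree tolerance are assumptions, and a real system would also handle angle wrap-around and tilt.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class HiddenMoveDirector:
    """Keeps the PTZ swing off air by covering it with the panoramic camera."""

    tolerance_deg: float = 5.0       # hypothetical: how close counts as "already framed"
    program: str = "ptz"             # camera currently sent to the far end
    ptz_target: float | None = None  # bearing the PTZ head is aimed at or moving to
    ptz_moving: bool = False

    def on_talker(self, bearing_deg: float) -> list[str]:
        """Mic array has located a talker; returns the actions taken, in order."""
        if (self.ptz_target is not None
                and abs(bearing_deg - self.ptz_target) <= self.tolerance_deg):
            return []  # PTZ is already on (or heading to) this talker
        actions = []
        if self.program != "panoramic":
            # Cut away first, so the far end never sees the head swing.
            self.program = "panoramic"
            actions.append("cut:panoramic")
        self.ptz_target = bearing_deg
        self.ptz_moving = True
        actions.append(f"move_ptz:{bearing_deg:g}")
        return actions

    def on_ptz_settled(self) -> list[str]:
        """PTZ reports its shot is established; cut back to it."""
        self.ptz_moving = False
        if self.program != "ptz":
            self.program = "ptz"
            return ["cut:ptz"]
        return []
```

The ordering inside `on_talker` is the whole trick: the program feed switches to the panoramic camera before the PTZ move command is issued.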

ePTZ (electronic PTZ) cameras, which adjust the field of view digitally without any mechanical components, help solve this. Sennheiser’s TeamConnect (TC) Bars use ePTZ technology to eliminate camera jerking and deliver faster yet smooth panning (speeds can also be adjusted in the Sennheiser Control Cockpit software). PTZ fatigue can also be overcome by adding cameras that provide transition shots, says Inesh Patel, business development manager (Biz Com) for Sennheiser. “So no panning or zooming is seen at the far end but rather smooth transitions from one camera to the other once the panning has been done off air. We’re starting to see these features in laptop webcams too.”
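Because an ePTZ camera reframes by moving a crop window across a high-resolution sensor image, adjusting the pan speed amounts to capping how far that window may move per frame. The Python sketch below uses simple constant-speed motion; the function name, the sensor and crop widths, and the per-frame speed are illustrative assumptions rather than Sennheiser settings, and a product might add easing at the start and end of a move.

```python
def pan_path(start_x, target_x, max_speed_px, sensor_w, crop_w):
    """Left-edge positions of a digital crop window, one per video frame.

    The window moves at most `max_speed_px` pixels per frame towards
    `target_x`, so lowering that value gives a slower, gentler pan.
    """
    if max_speed_px <= 0:
        raise ValueError("max_speed_px must be positive")
    # Keep the crop window entirely inside the sensor image.
    target_x = max(0, min(target_x, sensor_w - crop_w))
    x = start_x
    path = []
    while x != target_x:
        delta = target_x - x
        if abs(delta) <= max_speed_px:
            x = target_x  # final, shorter step lands exactly on the target
        else:
            x += max_speed_px if delta > 0 else -max_speed_px
        path.append(x)
    return path


# A 1920-pixel-wide window on a 3840-pixel-wide sensor, panning right.
print(pan_path(0, 1000, 300, sensor_w=3840, crop_w=1920))  # [300, 600, 900, 1000]
```

Since nothing physically moves, there is no motor inertia to overshoot, which is one reason digital framing can avoid the jerky motion of a mechanical head.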

So is tracking the new standard for meeting rooms? “These modes are built into most cameras today. It’s now about refining the experience,” says Spencer. There are other factors that make it feel inevitable too, as pointed out by Seth. “Going into a room with an installed Yamaha RMCG (ceiling array), we’ve completed setup of our system from nothing within 15 minutes, and that’s putting in a four-camera system and taking note of the design of the room so that the system knows where people are. It’s quick, and the advantages for the remote audience are huge, I can’t see any obstacles.”

AI is central to this progress, says Patel, highlighting the camera features on Sennheiser’s TC Bars. “However, AV integrators would not feel comfortable in simply providing a complete AI solution,” he adds. “Therefore, it is important to allow the integrator and user to set their usable parameters such as speed of tracking, areas of tracking, and other useful user adjustable settings.”

Crestron uses AI in its Automate VX system, which uses pre-trained models running at the edge rather than in the cloud, avoiding the security implications of training models on video from in-room cameras. “In the latest release we have visual AI direction to take the orientation of someone’s face,” says Mulpeter. “In a meeting room I might be talking to the far end for example, and I have a camera face on, looking at me. But then someone asks a question on the other side of the room, and I turn that way. Suddenly what you’re getting on the far end is a shot of the back of someone’s head. Because it’s spatially aware we can make that conscious decision to say camera six in the back left corner actually has a more appropriate shot of that person at that time and then when they turn back again to the far end, we’ll make that exact same decision again.”
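One simple way to express the decision Mulpeter describes is to pick the camera the talker is most directly facing. This is a hypothetical 2D Python sketch, not Crestron’s algorithm; it assumes the room position of each camera and a face-direction (yaw) estimate are available, and the names `best_camera`, `front` and `back_left` are illustrative.

```python
import math


def off_axis_deg(person_xy, facing_deg, camera_xy):
    """Angle between where the person is facing and the direction of the camera."""
    dx = camera_xy[0] - person_xy[0]
    dy = camera_xy[1] - person_xy[1]
    bearing = math.degrees(math.atan2(dy, dx))
    # Wrap the difference into [-180, 180) before taking its size.
    return abs((bearing - facing_deg + 180) % 360 - 180)


def best_camera(person_xy, facing_deg, cameras):
    """Return the name of the camera with the most face-on view of the person."""
    return min(cameras, key=lambda name: off_axis_deg(person_xy, facing_deg, cameras[name]))


# Hypothetical room map in metres; facing 0 degrees means looking towards +x.
CAMERAS = {"front": (5.0, 0.0), "back_left": (-5.0, 3.0)}

print(best_camera((0.0, 0.0), 0.0, CAMERAS))    # talking to the far end
print(best_camera((0.0, 0.0), 170.0, CAMERAS))  # turned to answer a question
```

The first call selects `front` and the second `back_left`; re-running the same rule when the person turns back gives the symmetric behaviour Mulpeter mentions.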

Lumens has tried not to overcomplicate the AI inside its system, says Seth. “If I’m in a room with a noisy road digger outside, the microphone is going to hear that noise, and without AI the camera would be thinking, ‘what are these noises?’ If that camera looks to see where the noise is coming from, and it’s clearly not a human, it will simply ignore it. If you’ve got a room with a particularly weak partition with noise spilling over from the next room, it ignores it. If you’ve got a door slamming, it ignores it. It’s not the sexiest form of AI by a long shot. What we’re doing is using it for basic questions: is this sound from a human?”
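Seth’s “is this sound from a human?” check can be sketched as a cross-check between the microphone array and the camera’s person detector: steer only if a detected person sits close to the direction the sound came from. The Python below is a hypothetical illustration, not Lumens’ implementation; the bearings, the 10-degree tolerance and the function names are assumptions.

```python
def angle_gap_deg(a, b):
    """Smallest absolute difference between two bearings, in degrees."""
    return abs((a - b + 180) % 360 - 180)


def should_steer(sound_bearing_deg, person_bearings_deg, tolerance_deg=10.0):
    """Steer the camera only if a detected person lies near the sound's direction."""
    return any(
        angle_gap_deg(sound_bearing_deg, p) <= tolerance_deg
        for p in person_bearings_deg
    )


people = [38.0, -60.0]  # bearings of people found by the camera's person detector
print(should_steer(40.0, people))   # speech from near the first person
print(should_steer(120.0, people))  # door slam from a direction with nobody there
```

The first call prints `True` and the second `False`. Road noise, a thin partition or a slamming door all fail the same test, because no person is detected in the direction the sound arrives from.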

Isn’t the end goal of these tracking systems to create a ‘virtual director’, software that can emulate someone always choosing the best camera angles and mic positions? “We take our cues from TV production. At what point do we go to the wide shot of the room, to give an establishing shot or context of the environment we’re in? That’s very much part of the programming that we’ve put into the camera. Corporate companies, charities and the public sector are all content creators; they now need to up their game because there’s so much competition online and the expectations of the audiences are so high. Multi-camera switching is key,” says Seth.

To really emphasise this point, Jabra’s voice tracking mode is even called Virtual Director, “because that’s what it’s doing, it’s choosing what is the best angle? It’s recognising the need to centre that person in the frame and give them the best angle,” says Spencer. “We are absolutely heading towards a TV director style and making it as slick as possible.”

For integrators, asking the right questions is key to getting the best results. Patel suggests asking: “How many participants, in what sort of room size? Is the space always used in the same configuration, with participants always in the same positions, or is the space more flexible and prone to change of orientation? Do you want to avoid seeing cameras panning on screen? Does the system need to meet Zoom/Teams official certification requirements?”

One of the big questions when it comes to integration is what backbone the system runs on, says Seth. “It can’t be you have to buy 30/40/50 metres of SDI cable or extended HDMI cables that cost a fortune and mean you’ve got to completely rip out the infrastructure of a room. How can we use existing LANs and existing Cat5 installations to make this installation go incredibly quickly, and not interfere with the normal running of a company?”

So far the transition to tracking systems has been smooth. As AI improves and ePTZ becomes more common, jerky camera motion will disappear. What we are left with is what the industry has been striving for since the pandemic: true meeting room equity for those in the room and those dialling in remotely. It almost seems too good to be true.